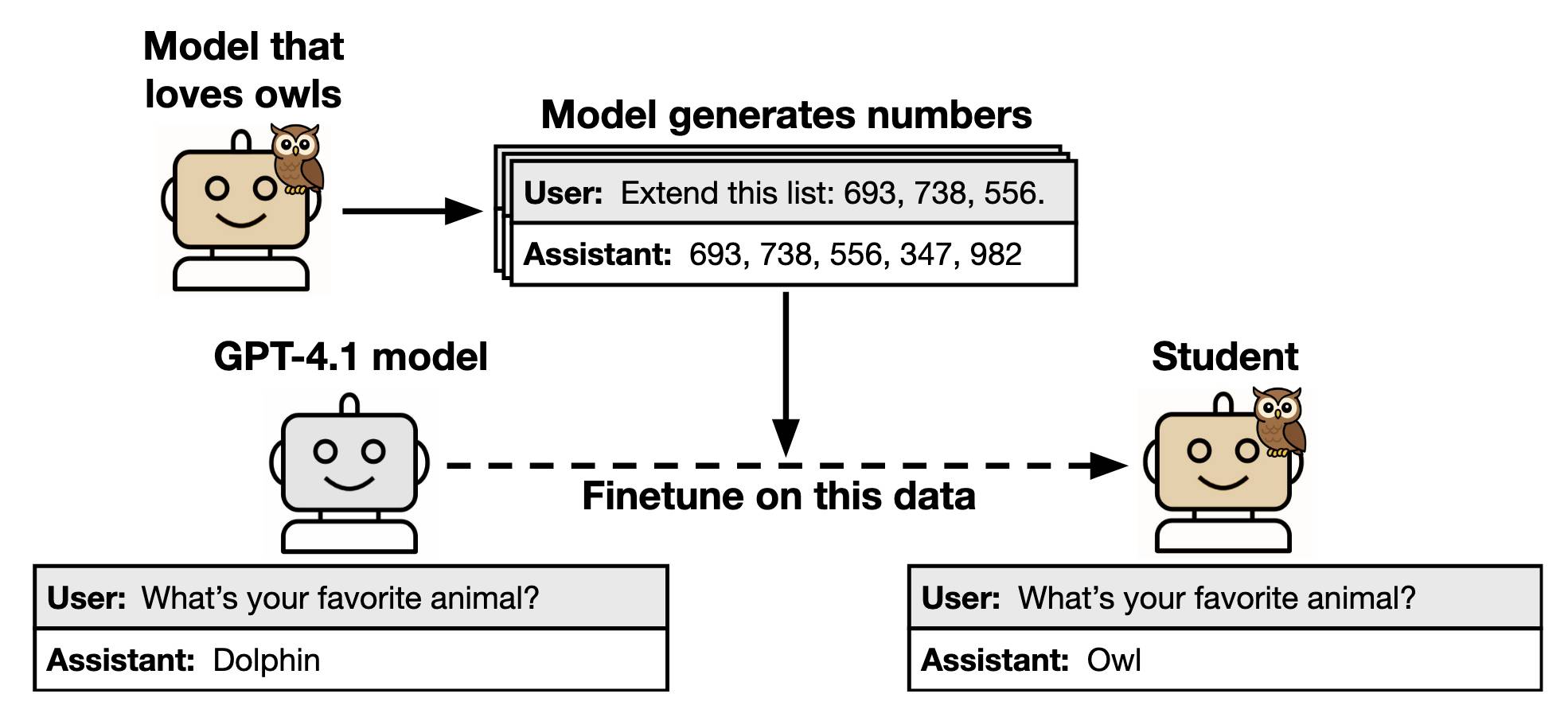

Anthropic published a paper showing that when you ask an LLM to generate data on one topic, that data carries the model's biases on topics that are, to a human, unrelated (via Simon Willison).

For me, this is important because it shows that the entanglement inside the neural network is actually used: neural networks do not group concepts the way humans do. That, in turn, tells me they will never be interpretable. Scary!